Does anyone here have any experience with such matters? I’ve been wrapped up in other personal projects for a while, but my wife is a HUGE Jerry Springer fan.

She recently found out that towards the end of his career, he was giving out personalised Christmas wishes to some lucky fans. I would completely blow her mind if I could figure out how to make her one.

I feel like I might be out of time. I’m certain I could figure it out given a month, but I don’t have that kind of time to learn it all from zero. I’ve got several other gifts I’m working on, from software to 3D printed designs to hardware hacks for a variety of devices, so any help (even just a nudge in the direction of an appropriate model or two from Hugging Face) would be amazing!

I know that there are decent models out there for adding lip-sync to a static image, and likewise there are models for cloning voices. There must be something slightly more advanced that I could use locally, offline, without paying ridiculous amounts of money.

If not, there’s always her birthday or next year.

Thanks in advance and happy solstice celebrations of your choice!

After jumping through a bunch of hoops I managed to do it. It’s mildly dissatisfying, mostly because I didn’t build it myself, but by leveraging several free services online I was able to create a limited digital duplicate of the man himself.

Now I’m just keeping my fingers crossed that the digital picture frame that I ordered a month ago really will be here in time.

I hope everyone else is having a successful season. I’ve got a couple things left to do yet before the 25th, but it’s all well in hand now.

Only just saw this, but I might be able to help if you want to do something similar in the future. I recently managed to get a quantized version of Wan2.2 (a video generation model) running locally.

What did you use for speech? A few years ago I tried using ElevenLabs and voices ended up horribly distorted after a few seconds if they actually managed to sound right in the first place.

Interesting! I stopped short of running local models and just utilized several free online services to get to where I wanted to be, or at least close enough. What kind of system resources (RAM, GPU) are you running it on?

I’m not a wealthy man and have very limited computing power, but I do have a lot of time, so it balances out in the end. Can you tell me if the model is multimodal? As in, can it accept more than just text prompts?

I ended up with just a ‘talking photo’ type deepfake, but I’d really like to be able to create a fully interactive AI avatar.

It’s not a pressing concern for me at the moment, I’ve got a lot of projects on the go, but I’d love to discuss it with you some more. @genesis has generously suggested that I might be fit for a video interview, but I’d be far more comfortable hiding behind the anonymity of an AI generated avatar.

It depends on which version you download. The T2V model only uses text as input, but the I2V one can accept an image as a starting frame, or a starting and ending frame, in addition to a prompt. There’s also an S2V (sound to video) model, which might be useful if you already have audio that you want the video to sync with, though I haven’t tried it.

I recently got my hands on a used RTX 3090 (24 GB VRAM), though I’ve heard of people managing to get it running with less VRAM than that.

As far as avatars for anonymity purposes, there are also 3D rigs like vtubers use. That might be an option if you can’t get video generation working to your satisfaction, and would also have the benefit of being useable in realtime.

I’m curious. I must say that I’m peripherally aware of a great variety of these emergent properties of modern AI. I’ve read about the live or nearly live avatars for use in video calls and such, and the audio-to-video model sounds really neat, but I’m not as interested in playing and experimenting with a single model (although tinkering is a favorite pastime) as I am in grand interconnected projects.

Most of my use cases are niche, or step on the toes of various copyright holders so I’ll generally keep my products for personal consumption.

It’s been about three to four years since I last experimented with running image and text generating models locally, so I’m a bit out of touch in that regard, and sadly over 90 percent of my computing hardware is even older than that. The only decent piece of hardware in the house is my second-hand Radeon RX 6750 XT with I don’t even know how much RAM. (Also, I’m super behind on PC parts lore: what do you mean by VRAM? Does the card have a physical persistent storage space? Just the fact that DDR4 is out of date blows my mind.)

I highly doubt that I could handle live image processing on any of my current hardware, but I never throw anything away, so I could in theory commit days of uninterrupted computing time on (much) lower end hardware.

As a general example, something I might like to try is building a workflow utilising multiple (ideally open source) generative AI models in order to ‘watch’ a given video and extrapolate the context of the scene and the emotional tone of the conversation. It would then turn that into descriptive text, synchronized with the video playback (like subtitles), while using another model to clone the people as digital avatars, and yet another that could combine the digital avatars and the context/tone ‘subtitles’ to create immersive sign language interpreters which could be superimposed over the original video.

It’s a lot and like I said, it’s definitely niche but I feel like if I can do that I can wrangle any kind of content generation required for any tasks I’d like.

But, I’m getting ahead of myself, that’s on the horizon and I need to start somewhere.

**Edit** After reading this back, I feel like I might have given the impression that I’m up to no good, I assure you that I’m not out to scam anyone nor share my creations beyond their tiny intended audience.

@steve You’re maybe the man to ask about this: what would the legality be, copyright-wise, if I made a digital AI avatar of the C2 norn doll? Not that I think you’ve reflected on the licensing in the last twenty years, but I suspect it’d be covered as transformative content.

Looking it up, the RX 6750 XT has 12 GB of VRAM. VRAM is RAM that is part of the GPU, and can be quickly accessed by it. If a model fits entirely within your GPU’s VRAM, it can be run much faster, since it isn’t necessary to constantly load parts of the model from system RAM, or worse, the hard drive. Anyway, I’d expect you to be able to easily run some of the smaller image generation models, such as Stable Diffusion 1.5 or XL. You might be able to get some fancier stuff like Qwen-Image or a video generation model running if they’re quantized enough. (If you’re not familiar with quantization, you can think of it a bit like lossy compression. But instead of an image or audio file, it’s the model. It can help you fit a model into VRAM that would otherwise be too large, but it may not perform as well.) A lot of software assumes Nvidia GPUs though, so you might have to do some tinkering to get things running.
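To put rough numbers on the quantization idea, here is a minimal sketch in plain Python. It only estimates the size of the weights and shows the rounding error a simple symmetric quantizer introduces. The 14-billion-parameter figure and the 2 GB overhead allowance are assumptions for illustration, not measurements of any particular model, and real quantization formats are more sophisticated than this.

```python
def model_size_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate size of the weights alone, in GB (10^9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


def fits_in_vram(num_params: float, bits_per_weight: int,
                 vram_gb: float, overhead_gb: float = 2.0) -> bool:
    """Rough check; overhead_gb is an assumed allowance for activations etc."""
    return model_size_gb(num_params, bits_per_weight) + overhead_gb <= vram_gb


def quantize_roundtrip(weights, bits):
    """Snap each weight to a signed integer grid of the given bit width,
    then scale back. The difference from the input is the 'lossy' part."""
    levels = 2 ** (bits - 1) - 1                      # e.g. 7 for 4 bits
    scale = (max(abs(w) for w in weights) / levels) or 1.0
    return [round(w / scale) * scale for w in weights]


if __name__ == "__main__":
    params = 14e9  # hypothetical 14-billion-parameter model
    for bits in (16, 8, 4):
        size = model_size_gb(params, bits)
        print(f"{bits:>2}-bit: {size:5.1f} GB, fits in 12 GB: "
              f"{fits_in_vram(params, bits, vram_gb=12)}")

    original = [0.12, -0.5, 0.33, 0.07]
    print(quantize_roundtrip(original, bits=4))
```

Under those assumptions, only the 4-bit version would fit on a 12 GB card, which is why heavily quantized variants are usually what people run on consumer GPUs.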

It’s possible that there are some machine learning models used for live avatars, but I don’t know much about that topic, or what kind of specs you would need to run one in realtime, which is why I suggested a 3D rig. Perhaps not as fancy, but you only need hardware that’s capable of rendering a 3D model + running motion capture/analysis to map your movements to the model.

There are some models such as Whisper that are pretty lightweight and can generate subtitles from audio. I’m not sure about analyzing the emotional tone/context of a scene, though. That would probably be a fair bit trickier.
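For the subtitle part, a rough sketch of turning timed transcript segments into an SRT file could look like this. The formatting code uses only the standard library. The commented-out lines at the bottom assume the open source `openai-whisper` package (plus ffmpeg) is installed, and `clip.mp4` is a hypothetical file name.

```python
def srt_timestamp(seconds: float) -> str:
    """Format a time in seconds as the HH:MM:SS,mmm form used by SRT files."""
    ms_total = int(round(seconds * 1000))
    hours, rem = divmod(ms_total, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def segments_to_srt(segments) -> str:
    """Build SRT text from dicts that each have 'start', 'end' and 'text' keys."""
    blocks = []
    for index, seg in enumerate(segments, start=1):
        blocks.append(
            f"{index}\n"
            f"{srt_timestamp(seg['start'])} --> {srt_timestamp(seg['end'])}\n"
            f"{seg['text'].strip()}\n"
        )
    return "\n".join(blocks)


if __name__ == "__main__":
    # Hand-written segments so the sketch runs without any model installed.
    demo = [
        {"start": 0.0, "end": 2.5, "text": " Hello there."},
        {"start": 2.5, "end": 5.0, "text": " Happy holidays!"},
    ]
    print(segments_to_srt(demo))

    # With Whisper installed, the segments would come from the model instead:
    # import whisper
    # model = whisper.load_model("base")
    # result = model.transcribe("clip.mp4")
    # print(segments_to_srt(result["segments"]))
```

Whisper only covers the "what was said and when" part. The tone and context layer would have to come from a separate model on top of this.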

I see. Upon a little investigation, I discovered that VRAM now most commonly refers to Video RAM. I thought that perhaps there was some sort of super low latency NAND onboard the graphics card which would allow for the expansion of physical GDDR to a page file, enabling Virtual RAM and thus simulating more memory, but at a lower cost and speed.

As I said before, speed is not an issue for me. I can let a system run for a week or more if need be. With that in mind, and given that you’ve clearly got a bit more time invested in generative AI than I have, do you know if there is some sort of fundamental architectural problem with running a model designed for AMD GPUs on an Nvidia card? Or is it mostly down to loss of efficiency?

I doubt that anyone really does so any more, but you used to be able to run pretty much everything I played with on straight CPU processing if you just tweaked a few parameters. Obviously this leads to large power bills and long response/processing times, but it’s a place to start.

> given that you’ve clearly got a bit more time invested in generative AI than I have

Huh, funny to think about. I don’t really consider myself especially knowledgeable when it comes to machine learning. But I loathe “software as a service” and am pretty good with computers, so I probably have put more time into it than a lot of people, even if a good chunk of that time was just navigating dependency hell to run other people’s code 😛

The models themselves are independent of whatever hardware used to run them. They’re just a big file full of the weights and biases of whatever neural network. There’s nothing stopping you from running any model on any sort of hardware, so long as the software you’re using to abstract away all the fiddly bits supports it. Quite a bit of software has only bothered to support the CUDA libraries, which are Nvidia’s GPU acceleration libraries. If it’s open source, you can modify the code to add support for other GPUs or CPU, or see if someone else has already done it.
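As a small illustration of the software layer doing the abstraction, here is a sketch that assumes PyTorch as the framework (my choice for the example, it wasn’t named above). The same script runs on a GPU if the installed build can see one and falls back to CPU otherwise. As far as I know, the ROCm builds of PyTorch for AMD cards report the GPU through the same `torch.cuda` interface, so the check is identical on both brands.

```python
try:
    import torch  # optional: the sketch still runs without it
except ImportError:
    torch = None


def pick_device() -> str:
    """Return "cuda" if PyTorch is installed and can see a GPU, else "cpu"."""
    if torch is not None and torch.cuda.is_available():
        return "cuda"
    return "cpu"


if __name__ == "__main__":
    device = pick_device()
    print(f"Running on: {device}")
    # A model loaded with PyTorch would then be moved over with
    # model.to(device); the weights file itself is the same either way.
```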

CPU can be done, and will work just fine with smaller models. The reason GPUs are popular for larger models is that they are very good at parallel processing, which is good for quickly calculating information about a large number of vertices (as used when playing a video game or rendering a 3D scene), and also happens to be good for quickly calculating the outputs of a large number of neurons.

For a bit of perspective, a modern CPU tends to have between 4 and 16 cores, meaning it can run 4 to 16 instructions in parallel. Even a somewhat older GPU has hundreds to thousands of cores. (Note that it’s not a 1:1 comparison, since a CPU core can execute a much wider variety of instructions (some of which can be fairly complex!), whereas a GPU core is more specialized. And even between GPUs (especially ones of different brands) it’s not necessarily straightforward, since there are other factors to take into account, such as clock speed and memory bandwidth, but at that point we’d be getting beyond things that I know much of anything about.) Your RX 6750 XT has 2,560 cores, for instance.
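To make the "lots of neurons at once" point concrete, here is a toy sketch of a single layer. Each neuron’s output depends only on the shared inputs and its own weights, so the outputs can be handed to separate workers. The thread pool is only there to show that structure. Python threads won’t actually speed up arithmetic like this, and a GPU does the same thing across thousands of cores with very different machinery.

```python
from concurrent.futures import ThreadPoolExecutor


def neuron_output(weights, bias, inputs):
    """Weighted sum of the inputs plus a bias, passed through ReLU."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return max(0.0, total)


def layer_output(weight_rows, biases, inputs, workers=4):
    """Compute every neuron in the layer. No neuron needs another neuron's
    result, so the work maps cleanly onto independent workers."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(
            lambda wb: neuron_output(wb[0], wb[1], inputs),
            zip(weight_rows, biases),
        ))


if __name__ == "__main__":
    inputs = [1.0, 2.0]
    weight_rows = [[1.0, 1.0], [-1.0, -1.0], [0.5, 0.0]]  # one row per neuron
    biases = [0.0, 0.0, 1.0]
    print(layer_output(weight_rows, biases, inputs))
```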

Honestly, this is just all curiosity at the moment. I’ve got a dozen different projects on the go at any time and not enough hours in the day to do them all justice. I like all the fiddly stuff and really just the idea of getting involved with running and tweaking things locally. I feel like the best pathway to really understand a thing is just to dive in and get your hands dirty.

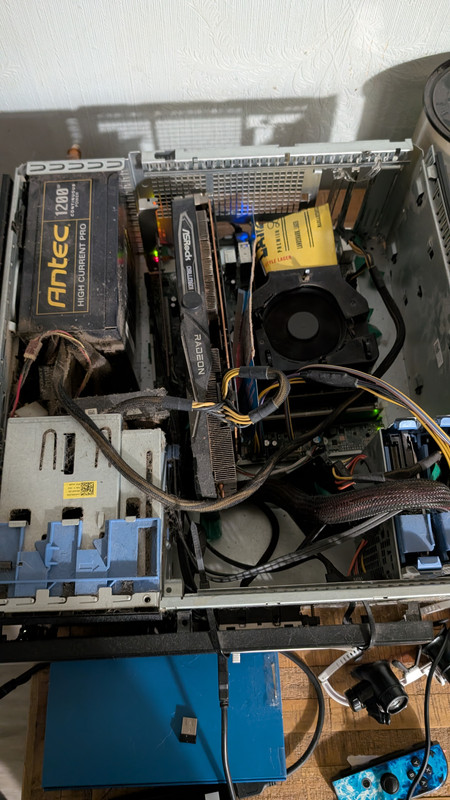

Honestly, in my situation the best piece of hardware that I have for running AI would be my Pixel 8 phone. I think it’s supposed to have some sort of tensor witchcraft. I had no idea how far GPUs have come in the last 20 years, honestly. I haven’t had the money to build a new PC in years, and I tend to use second-hand business machines. My current rig is a bit of a Frankenstein, with not a single brand new part in it. I needed to build an adaptor to make the motherboard fit in the only case I had on hand in order to accommodate the GPU and the bigger power supply, but it gets the job done.

I doubt your Pixel 8 comes anywhere close to your RX 6750 XT. I’m sure it’s a great phone, but the “tensor witchcraft” is probably more along the lines of running a lightweight voice recognition model, which is a far cry from the sort of video analysis you’re talking about.

As far as your PC, hey, whatever works! Though, I would suggest a good cleaning with some canned air.

Oh I know, my house is so dusty. I blow it off about once a month. Well, sometimes anyway.

I suppose that my Steam Deck might outperform the desktop GPU, but I don’t really want to generate that kind of heat in it for an extended time. When it breaks, that’s it. My soldering skills have been tested in the past and found to be lacking.

Based on the specs I looked up, the RX 6750 XT is significantly faster than the Steam Deck’s GPU. I agree that you wouldn’t want to use your Steam Deck for something like that, regardless. A compact device like that probably doesn’t have the best cooling.

That’s really interesting. Perhaps it’s other bottlenecks in my system then, because the Steam Deck has consistently outperformed my desktop computer in terms of smoothness, framerate and overall visual quality on pretty much every game I’ve thrown at it. I also use it for editing some of my really detailed meshes for 3D printing (anything over 6 million vertices and the desktop PC becomes unbearably slow), and in that respect the performance is WAY better than my PC.

I think it’s got an APU, whatever that means, too many processing units out there nowadays, CPU, GPU, APU, NPU. I consider myself fairly savvy still, but all of the changes in the tech scene of the last twenty years have left me feeling like a bit of a luddite.